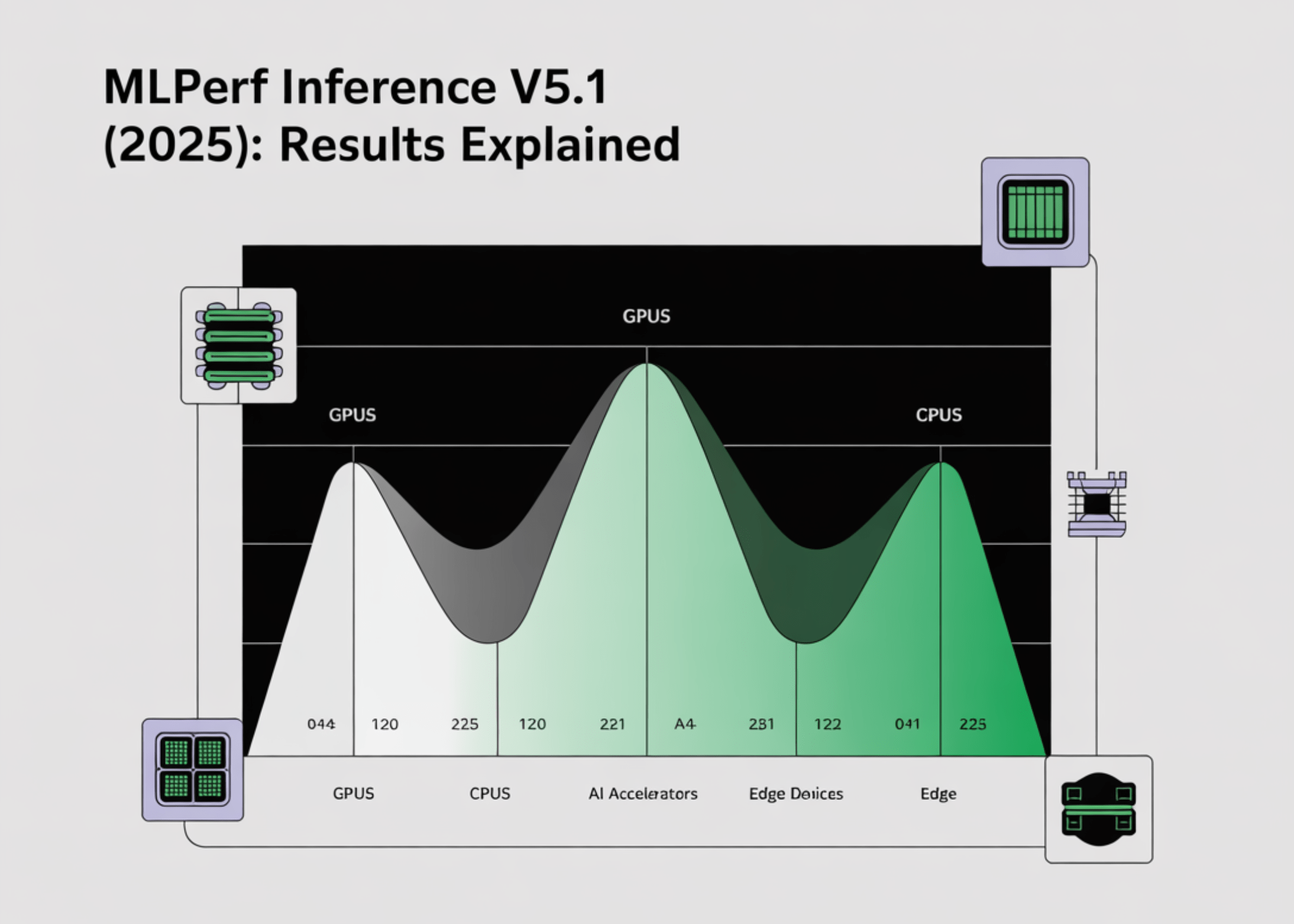

What MLPerf Inference Actually Measures

MLPerf Inference quantifies how fast a complete system (hardware + runtime + serving stack) executes fixed, pre-trained models under strict latency and accuracy constraints. Results are reported for the Datacenter and Edge suites, with standardized request patterns (“scenarios”) generated by LoadGen to keep runs architecture-neutral and reproducible. The Closed division fixes the model and preprocessing for apples-to-apples comparisons; the Open division allows model changes, so its results are not strictly comparable. Availability tags (Available, Preview, and RDI, for research/development/internal) indicate whether a configuration is shipping or experimental.

The 2025 Update (v5.0 → v5.1): What Changed?

The v5.1 results (published Sept 9, 2025) add three modern workloads and broaden interactive serving:

- DeepSeek-R1 (first reasoning benchmark)

- Llama-3.1-8B (summarization) replacing GPT-J

- Whisper Large V3 (ASR)

This round recorded 27 submitters and first-time appearances of AMD Instinct MI355X, Intel Arc Pro B60 48GB Turbo, NVIDIA GB300, RTX 4000 Ada-PCIe-20GB, and RTX Pro 6000 Blackwell Server Edition. Interactive scenarios (tight TTFT/TPOT limits) were expanded beyond a single model to capture agent/chat workloads.

Scenarios: The Four Serving Patterns You Must Map to Real Workloads

- Offline: maximize throughput, no latency bound—batching and scheduling dominate.

- Server: Poisson arrivals with p99 latency bounds—closest to chat/agent backends.

- Single-Stream / Multi-Stream (Edge emphasis): strict per-stream tail latency; Multi-Stream stresses concurrency at fixed inter-arrival intervals.

Each scenario has a defined metric (e.g., max Poisson throughput for Server; throughput for Offline).
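To make the Server pattern concrete, here is a minimal sketch that generates Poisson arrivals at a target rate and checks a p99 latency bound against a toy system. It is not LoadGen: the function names, the single-worker FIFO queue, the constant service time, and the nearest-rank percentile are all assumptions chosen for illustration.

```python
import math
import random


def poisson_arrivals(qps, n, seed=0):
    """Return n arrival timestamps (seconds) with exponential inter-arrival gaps."""
    rng = random.Random(seed)
    t, times = 0.0, []
    for _ in range(n):
        t += rng.expovariate(qps)  # mean gap is 1 / qps
        times.append(t)
    return times


def fifo_latencies(arrivals, service_s):
    """Toy system (assumption): one worker, constant service time, FIFO order."""
    free_at, latencies = 0.0, []
    for arrived in arrivals:
        start = max(arrived, free_at)  # wait if the worker is still busy
        free_at = start + service_s
        latencies.append(free_at - arrived)
    return latencies


def percentile(values, pct):
    """Nearest-rank percentile for an integer pct in 1..100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_p99_bound(qps, service_s, bound_s, n=5000):
    """True if the simulated p99 latency stays within bound_s at this rate."""
    latencies = fifo_latencies(poisson_arrivals(qps, n), service_s)
    return percentile(latencies, 99) <= bound_s


if __name__ == "__main__":
    for qps in (1, 50, 200):
        print(qps, meets_p99_bound(qps, service_s=0.01, bound_s=0.1))
```

Searching for the highest `qps` at which `meets_p99_bound` still returns `True` mirrors the idea behind the Server metric, though not the exact procedure the benchmark uses.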

Latency Metrics for LLMs: TTFT and TPOT Are Now First-Class

LLM tests report TTFT (time-to-first-token) and TPOT (time-per-output-token). v5.0 introduced stricter interactive limits for Llama-2-70B (p99 TTFT 450 ms, TPOT 40 ms) to reflect user-perceived responsiveness. The long-context Llama-3.1-405B keeps higher bounds (p99 TTFT 6 s, TPOT 175 ms) due to model size and context length. These constraints carry into v5.1 alongside new LLM and reasoning tasks.
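As a rough illustration of the two metrics, the sketch below derives TTFT and TPOT for a single request from token timestamps. The function name and the timestamps are hypothetical, and it assumes TPOT is the average gap between output tokens after the first; the benchmark's own tooling should be treated as the authority on the exact definition.

```python
def ttft_tpot_ms(request_ms, token_times_ms):
    """Return (TTFT, TPOT) in milliseconds for one request.

    Assumption: TPOT averages the gaps between output tokens after the first.
    """
    if not token_times_ms:
        raise ValueError("need at least one output token")
    ttft = token_times_ms[0] - request_ms
    gaps = len(token_times_ms) - 1
    # With a single token there is no inter-token gap to average.
    tpot = (token_times_ms[-1] - token_times_ms[0]) / gaps if gaps else 0.0
    return ttft, tpot


if __name__ == "__main__":
    # Hypothetical timestamps: request at 0 ms, tokens at 400, 440, and 480 ms.
    print(ttft_tpot_ms(0, [400, 440, 480]))
```

For the hypothetical request above, TTFT is 400 ms and TPOT is 40 ms. The published limits apply to the p99 across all requests in a run, not to a single request.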

Key v5.1 entries and their quality/latency gates (abbreviated; latency pairs are TTFT/TPOT):

- LLM Q&A – Llama-2-70B (OpenOrca): Conversational 2000 ms/200 ms; Interactive 450 ms/40 ms; 99% and 99.9% accuracy targets.

- LLM Summarization – Llama-3.1-8B (CNN/DailyMail): Conversational 2000 ms/100 ms; Interactive 500 ms/30 ms.

- Reasoning – DeepSeek-R1: TTFT 2000 ms / TPOT 80 ms; 99% of FP16 (exact-match baseline).

- ASR – Whisper Large V3 (LibriSpeech): WER-based quality (datacenter + edge).

- Long-context – Llama-3.1-405B: TTFT 6000 ms, TPOT 175 ms.

- Image – SDXL 1.0: FID/CLIP ranges; Server has a 20 s constraint.

Legacy CV/NLP (ResNet-50, RetinaNet, BERT-L, DLRM, 3D-UNet) remain for continuity.
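The latency gates listed above can be kept as a small lookup table when screening your own measurements. The sketch below is a convenience, not an official artifact: the dictionary keys (including the `"default"` mode label) are hypothetical, and it reads each millisecond pair in the list as TTFT/TPOT.

```python
# (model, mode) -> (TTFT limit ms, TPOT limit ms), transcribed from the list above.
# Key names are illustrative labels, not official benchmark identifiers.
LATENCY_GATES_MS = {
    ("llama-2-70b", "conversational"): (2000, 200),
    ("llama-2-70b", "interactive"): (450, 40),
    ("llama-3.1-8b", "conversational"): (2000, 100),
    ("llama-3.1-8b", "interactive"): (500, 30),
    ("deepseek-r1", "default"): (2000, 80),
    ("llama-3.1-405b", "default"): (6000, 175),
}


def passes_gate(model, mode, ttft_ms, tpot_ms):
    """True if both measured latencies are within the gate for (model, mode)."""
    ttft_limit, tpot_limit = LATENCY_GATES_MS[(model, mode)]
    return ttft_ms <= ttft_limit and tpot_ms <= tpot_limit


def modes_passed(model, ttft_ms, tpot_ms):
    """List the modes of a model whose gates the measurement satisfies."""
    return sorted(
        mode
        for (m, mode) in LATENCY_GATES_MS
        if m == model and passes_gate(m, mode, ttft_ms, tpot_ms)
    )


if __name__ == "__main__":
    print(modes_passed("llama-2-70b", ttft_ms=900, tpot_ms=60))
```

Note that the same measured pair can pass for one model and fail for another: 38 ms TPOT clears the Llama-2-70B interactive gate but not the 30 ms gate for Llama-3.1-8B.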

Power Results: How to Read Energy Claims

MLPerf Power (optional) reports measured wall-plug energy for the same runs (Server/Offline: system power; Single/Multi-Stream: energy per stream). Only measured runs are valid for energy-efficiency comparisons; TDPs and vendor estimates are out of scope. v5.1 includes both datacenter and edge power submissions, though broader participation is encouraged.
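The arithmetic behind an efficiency figure is simple once measured power is available. The sketch below integrates a hypothetical log of (time, watts) samples into joules and divides completed samples by that energy. The function names and the trapezoidal integration are assumptions for illustration; this is not the MLPerf Power methodology itself.

```python
def energy_joules(power_log):
    """Trapezoidal integral of (time_s, watts) samples, in joules."""
    total = 0.0
    for (t0, p0), (t1, p1) in zip(power_log, power_log[1:]):
        total += (t1 - t0) * (p0 + p1) / 2
    return total


def samples_per_joule(completed_samples, power_log):
    """Efficiency as completed inference samples per joule of measured energy."""
    return completed_samples / energy_joules(power_log)


if __name__ == "__main__":
    # Hypothetical log: a steady 100 W draw sampled once per second for 2 s.
    log = [(0, 100), (1, 100), (2, 100)]
    print(energy_joules(log), samples_per_joule(400, log))
```

The point of the sketch is that the denominator comes from a measured power trace over the same run; substituting a TDP for the trace produces a number that is not comparable to a measured submission.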

How to Read the Tables Without Fooling Yourself

- Compare Closed vs Closed only; Open runs may use different models/quantization.

- Match accuracy targets (99% vs 99.9%)—throughput often drops at stricter quality.

- Normalize cautiously: MLPerf reports system-level throughput under constraints; dividing by accelerator count yields a derived “per-chip” number that MLPerf does not define as a primary metric. Use it only for budgeting sanity checks, not marketing claims.

- Filter by Availability (prefer Available) and include Power columns when efficiency matters.
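The checks above can be sketched as a small comparability filter. The `Result` record, its field names, and the numbers in the demo are hypothetical and do not reflect the MLCommons results schema or any real submission; the per-accelerator figure is included only as the derived heuristic described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    system: str
    division: str         # "Closed" or "Open"
    availability: str     # "Available", "Preview", or "RDI"
    model: str
    scenario: str
    accuracy_target: str  # e.g. "99%" or "99.9%"
    throughput: float     # system-level, as reported
    accelerators: int


def comparable(a, b):
    """Closed vs Closed only, with identical model, scenario, and accuracy target."""
    return (
        a.division == b.division == "Closed"
        and (a.model, a.scenario, a.accuracy_target)
        == (b.model, b.scenario, b.accuracy_target)
    )


def per_accelerator(r):
    """Derived heuristic only; not an MLPerf-defined metric."""
    return r.throughput / r.accelerators


if __name__ == "__main__":
    # Made-up rows for illustration.
    a = Result("sys-a", "Closed", "Available", "llama-2-70b", "Server", "99%", 8000.0, 8)
    b = Result("sys-b", "Closed", "Preview", "llama-2-70b", "Server", "99%", 36000.0, 72)
    if comparable(a, b):
        print(per_accelerator(a), per_accelerator(b))
```

Availability is deliberately left out of `comparable` so that it can be applied as a separate filter, for example keeping only rows where `availability == "Available"` before ranking.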

Interpreting 2025 Results: GPUs, CPUs, and Other Accelerators

GPUs (rack-scale to single-node). New silicon shows up prominently in Server-Interactive (tight TTFT/TPOT) and in long-context workloads where scheduler & KV-cache efficiency matter as much as raw FLOPs. Rack-scale systems (e.g., GB300 NVL72 class) post the highest aggregate throughput; normalize by both accelerator and host counts before comparing to single-node entries, and keep scenario/accuracy identical.

CPUs (standalone baselines + host effects). CPU-only entries remain useful baselines and highlight preprocessing and dispatch overheads that can bottleneck accelerators in Server mode. New Xeon 6 results and mixed CPU+GPU stacks appear in v5.1; check host generation and memory configuration when comparing systems with similar accelerators.

Alternative accelerators. v5.1 increases architectural diversity (GPUs from multiple vendors plus new workstation/server SKUs). Where Open-division submissions appear (e.g., pruned/low-precision variants), validate that any cross-system comparison holds constant division, model, dataset, scenario, and accuracy.

Practical Selection Playbook (Map Benchmarks to SLAs)

- Interactive chat/agents → Server-Interactive on Llama-2-70B/Llama-3.1-8B/DeepSeek-R1 (match latency & accuracy; scrutinize p99 TTFT/TPOT).

- Batch summarization/ETL → Offline on Llama-3.1-8B; throughput per rack is the cost driver.

- ASR front-ends → Whisper V3 Server with tail-latency bound; memory bandwidth and audio pre/post-processing matter.

- Long-context analytics → Llama-3.1-405B; evaluate if your UX tolerates 6 s TTFT / 175 ms TPOT.

What the 2025 Cycle Signals

- Interactive LLM serving is table-stakes. Tight TTFT/TPOT in v5.x makes scheduling, batching, paged attention, and KV-cache management visible in results—expect different leaders than in pure Offline.

- Reasoning is now benchmarked. DeepSeek-R1 stresses control-flow and memory traffic differently from next-token generation.

- Broader modality coverage. Whisper V3 and SDXL exercise pipelines beyond token decoding, surfacing I/O and bandwidth limits.

Summary

MLPerf Inference v5.1 makes inference comparisons actionable only when they are grounded in the benchmark’s rules: align on the Closed division, match scenario and accuracy (including the TTFT/TPOT limits for interactive LLM serving), and prefer Available systems with measured Power when reasoning about efficiency. Treat any per-device split as a derived heuristic, because MLPerf reports system-level performance. The 2025 cycle expands coverage with DeepSeek-R1, Llama-3.1-8B, and Whisper Large V3, plus broader silicon participation, so procurement should filter results to the workloads that mirror production SLAs (Server-Interactive for chat/agents, Offline for batch) and validate claims directly against the MLCommons result pages and power methodology.
