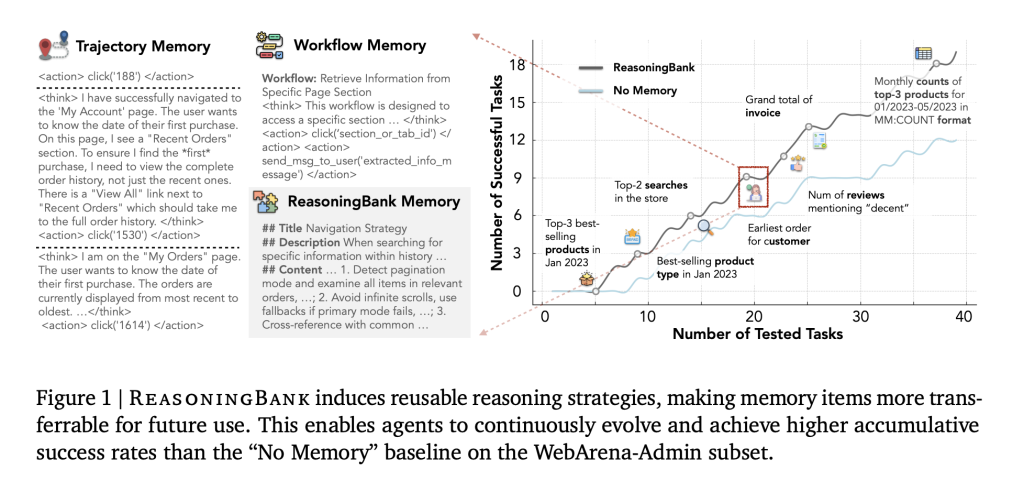

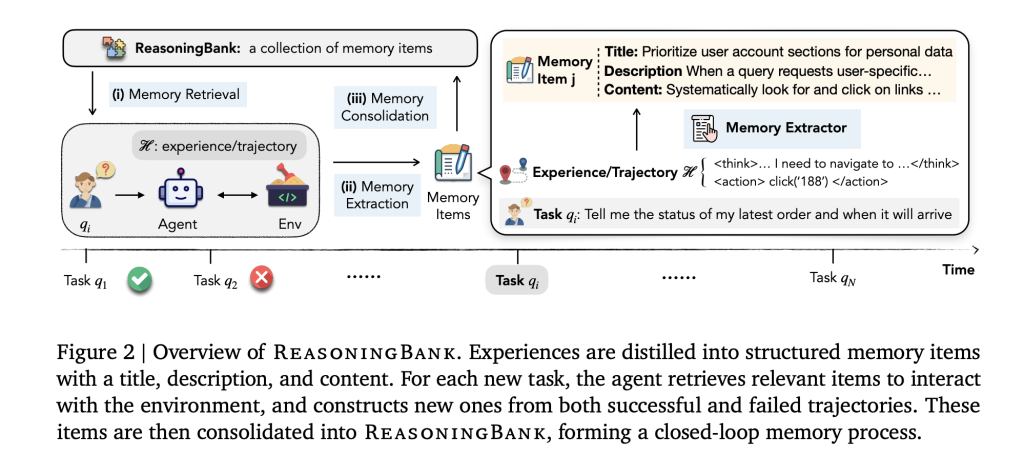

How do you make an LLM agent actually learn from its own runs, including its failures, without retraining? Google Research proposes ReasoningBank, an agent memory framework that distills an agent's own interaction traces, both successful and failed, into reusable, high-level reasoning strategies. These strategies are retrieved to guide future decisions, and the loop repeats so the agent self-evolves. Combined with memory-aware test-time scaling (MaTTS), the approach delivers up to a 34.2% relative gain in effectiveness and 16% fewer interaction steps across web and software-engineering benchmarks, and it outperforms prior memory designs that store raw trajectories or success-only workflows.

So, what is the problem?

LLM agents tackle multi-step tasks (web browsing, computer use, repo-level bug fixing) but generally fail to accumulate and reuse experience. Conventional “memory” tends to hoard raw logs or rigid workflows. Those are brittle across environments and often ignore useful signals from failures—where a lot of actionable knowledge lives. ReasoningBank reframes memory as compact, human-readable strategy items that are easier to transfer between tasks and domains.

So how does ReasoningBank tackle it?

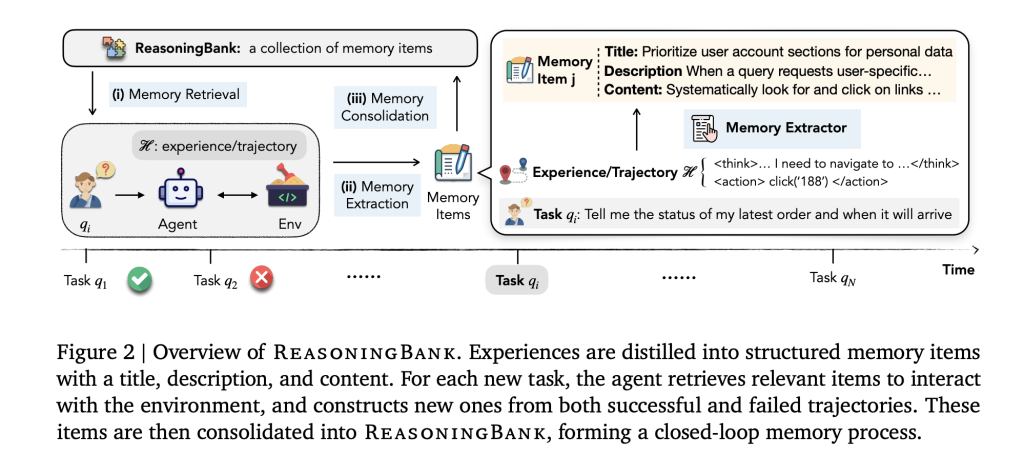

Each experience is distilled into a memory item with a title, a one-line description, and content containing actionable principles (heuristics, checks, constraints). Retrieval is embedding-based: for a new task, the top-k most relevant items are injected as system guidance; after execution, the run is judged as a success or failure, and new items are distilled from it and appended to the bank. The loop is intentionally simple (retrieve → inject → judge → distill → append), so improvements can be attributed to the abstraction of strategies rather than to heavy memory management.
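The loop above can be sketched in a few dozen lines. This is a minimal, hypothetical sketch, not Google's implementation: `ReasoningBankSketch`, `run_task`, and the bag-of-words `embed` function are illustrative names, and the toy word-count similarity stands in for a real embedding model. The judge and distillation steps, which would be LLM calls, are passed in as plain callables.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MemoryItem:
    """One distilled strategy: a title, a one-line description, actionable content."""
    title: str
    description: str
    content: str


def embed(text: str) -> Counter:
    # Hypothetical stand-in for a real embedding model: bag-of-words counts.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class ReasoningBankSketch:
    items: list[MemoryItem] = field(default_factory=list)

    def retrieve(self, task: str, k: int = 2) -> list[MemoryItem]:
        """Return the k items most similar to the task description."""
        query = embed(task)

        def score(item: MemoryItem) -> float:
            return cosine(query, embed(f"{item.title} {item.description} {item.content}"))

        return sorted(self.items, key=score, reverse=True)[:k]

    def inject(self, task: str, k: int = 2) -> str:
        """Format the retrieved items as system guidance for the agent."""
        lines = [f"- {item.title}: {item.content}" for item in self.retrieve(task, k)]
        return "Strategies from past runs:\n" + "\n".join(lines)

    def append(self, new_items: list[MemoryItem]) -> None:
        # Consolidation kept deliberately simple: new items are appended.
        self.items.extend(new_items)


def run_task(
    bank: ReasoningBankSketch,
    task: str,
    act: Callable[[str, str], list[str]],
    judge: Callable[[str, list[str]], bool],
    distill: Callable[[str, list[str], bool], list[MemoryItem]],
    k: int = 2,
) -> bool:
    """One pass of retrieve -> inject -> judge -> distill -> append."""
    guidance = bank.inject(task, k)                  # retrieve + inject
    trajectory = act(guidance, task)                 # agent acts (stubbed)
    success = judge(task, trajectory)                # success/failure label (stubbed)
    bank.append(distill(task, trajectory, success))  # distill + append
    return success


bank = ReasoningBankSketch([
    MemoryItem("Prefer account pages", "Where user-specific data lives",
               "prefer account pages for user specific data such as order history"),
    MemoryItem("Verify pagination", "Avoid missing results",
               "verify pagination mode before concluding a list is complete"),
])
print(bank.retrieve("find the user order history", k=1)[0].title)  # Prefer account pages
```

Keeping consolidation as a plain append mirrors the point above: any gains should come from the strategy abstraction, not from elaborate memory management.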

Why it transfers: items encode reasoning patterns (“prefer account pages for user-specific data; verify pagination mode; avoid infinite scroll traps; cross-check state with task spec”), not website-specific DOM steps. Failures become negative constraints (“do not rely on search when the site disables indexing; confirm save state before navigation”), which prevents repeated mistakes.
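To make the success/failure asymmetry concrete, here is a hypothetical `distill` helper. In ReasoningBank the lessons themselves would be extracted by an LLM from the judged trajectory; here they are passed in as plain strings, and the phrasing templates (`Strategy:`, `Avoid:`, `Do not ...`) are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    title: str
    description: str
    content: str


def distill(lessons: list[str], success: bool) -> list[MemoryItem]:
    """Turn lessons from one judged trajectory into memory items.

    Successful runs yield reusable strategies; failed runs yield negative
    constraints, so the same mistake is less likely to be repeated.
    """
    items = []
    for lesson in lessons:
        if success:
            items.append(MemoryItem(f"Strategy: {lesson}",
                                    "Worked in a successful run", lesson))
        else:
            items.append(MemoryItem(f"Avoid: {lesson}",
                                    "Led to a failed run; treat as a constraint",
                                    f"Do not {lesson}."))
    return items


for item in distill(["rely on search when the site disables indexing"], success=False):
    print(item.content)  # Do not rely on search when the site disables indexing.
```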

Memory-aware test-time scaling (MaTTS) proposed as well!

Test-time scaling (running more rollouts or refinements per task) is effective only if the system can learn from the extra trajectories. The research team also proposes memory-aware test-time scaling (MaTTS), which integrates scaling with ReasoningBank:

- Parallel MaTTS: generate k rollouts in parallel, then self-contrast them to refine strategy memory.

- Sequential MaTTS: iteratively self-refine a single trajectory, mining intermediate notes as memory signals.
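The two scaling modes can be outlined with the model calls abstracted away. This is a hypothetical sketch, not the paper's code: `rollout`, `contrast`, and `refine` stand in for LLM calls, and the `k` and `steps` defaults are arbitrary. What it shows is where the extra memory signal comes from: the set of k trajectories in the parallel case, and the intermediate notes in the sequential case.

```python
from typing import Callable

Trajectory = list[str]


def parallel_matts(
    task: str,
    guidance: str,
    rollout: Callable[[str, str, int], Trajectory],
    contrast: Callable[[str, list[Trajectory]], list[str]],
    k: int = 3,
) -> tuple[list[Trajectory], list[str]]:
    """Parallel MaTTS sketch: k independent rollouts, then one self-contrast pass.

    `contrast` stands in for an LLM call that compares the rollouts and
    returns strategy text for the memory bank.
    """
    trajectories = [rollout(guidance, task, seed) for seed in range(k)]
    return trajectories, contrast(task, trajectories)


def sequential_matts(
    task: str,
    guidance: str,
    rollout: Callable[[str, str, int], Trajectory],
    refine: Callable[[str, Trajectory], tuple[Trajectory, str]],
    steps: int = 3,
) -> tuple[Trajectory, list[str]]:
    """Sequential MaTTS sketch: repeatedly refine one trajectory and keep
    each refinement's intermediate note as a memory signal."""
    trajectory = rollout(guidance, task, 0)
    notes: list[str] = []
    for _ in range(steps):
        trajectory, note = refine(task, trajectory)
        notes.append(note)
    return trajectory, notes
```

In both cases the returned strategy text or notes would then be distilled into memory items, which is what separates MaTTS from vanilla best-of-N: the extra compute also feeds the memory.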

The synergy is two-way: richer exploration produces better memory; better memory steers exploration toward promising branches. Empirically, MaTTS yields stronger, more monotonic gains than vanilla best-of-N without memory.

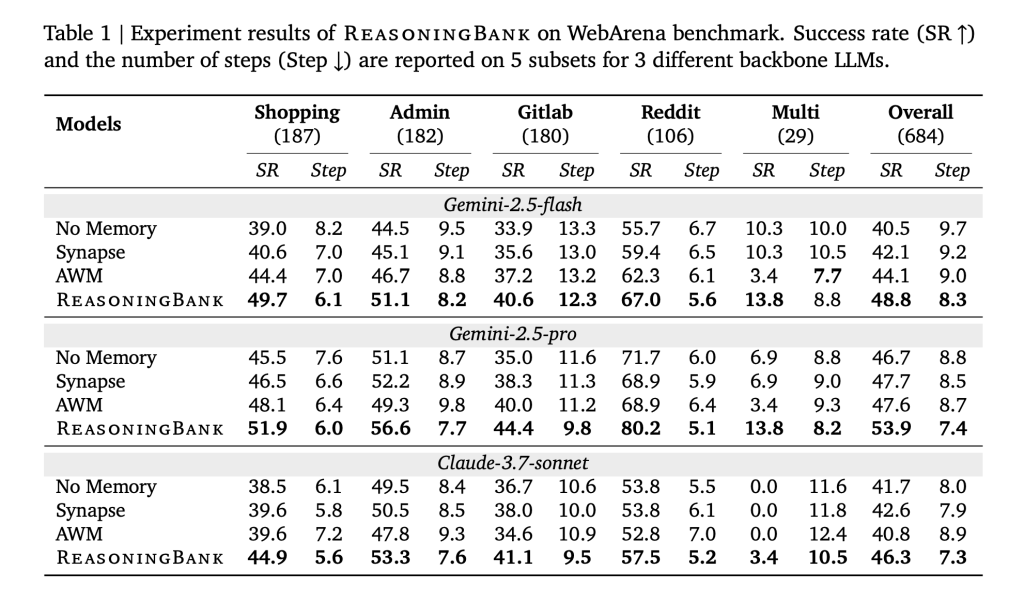

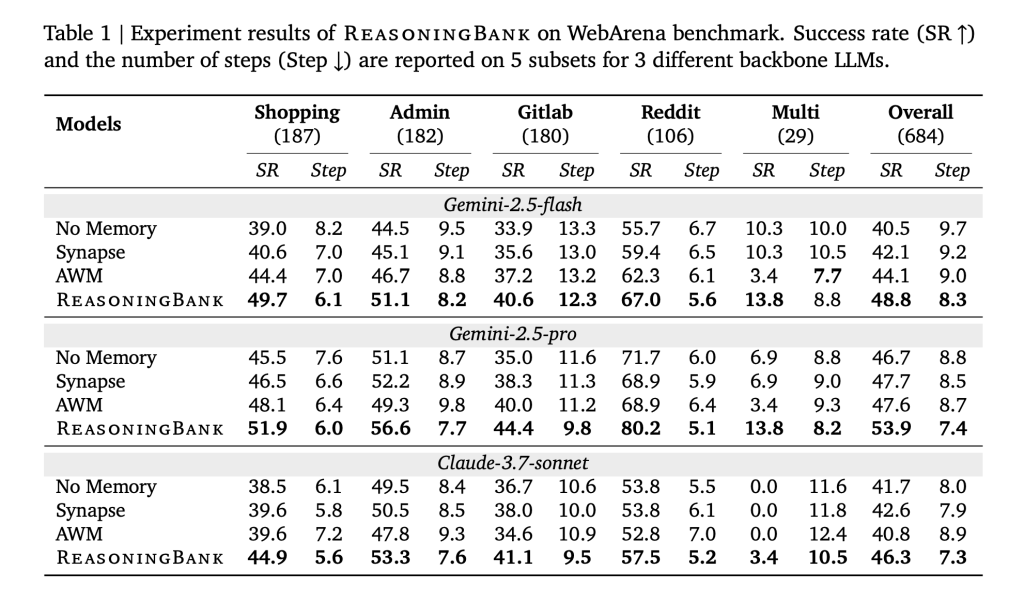

So, how well does it work?

- Effectiveness: ReasoningBank + MaTTS improves task success by up to 34.2% (relative) over a no-memory baseline and outperforms prior memory designs that reuse raw traces or success-only routines.

- Efficiency: Interaction steps drop by 16% overall; further analysis shows the largest reductions on successful trials, indicating fewer redundant actions rather than premature aborts.
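Both headline numbers are relative, not absolute. As an illustration with made-up numbers (not results from the paper): a baseline with a 40% success rate would need to reach about 53.7% for a 34.2% relative gain, which is roughly a 13.7-point absolute gain; likewise, 10 steps per task dropping to 8.4 is a 16% reduction.

```python
def relative_change(baseline: float, new: float) -> float:
    """Percent change of `new` relative to `baseline`."""
    return (new - baseline) / baseline * 100


# Illustrative numbers only, not results from the paper.
print(round(relative_change(40.0, 53.68), 1))  # 34.2  (success rate, %)
print(round(relative_change(10.0, 8.4), 1))    # -16.0 (steps per task)
```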

Where does this fit in the agent stack?

ReasoningBank is a plug-in memory layer for interactive agents that already use ReAct-style decision loops or best-of-N test-time scaling. It doesn't replace verifiers or planners; it amplifies them by injecting distilled lessons at the prompt/system level. On web tasks, it complements BrowserGym/WebArena/Mind2Web; on software tasks, it layers atop SWE-bench Verified setups.
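The "plug-in layer" idea can be illustrated with a small wrapper. This is a hypothetical sketch: it assumes the existing agent exposes a policy callable with the signature `(system_prompt, task) -> str`, and that a `retrieve` function returns lesson strings for a task. The wrapper only prepends lessons to the system prompt and leaves the underlying ReAct loop, planner, or verifier untouched.

```python
from typing import Callable

# Assumed policy interface: (system_prompt, task) -> model output.
Policy = Callable[[str, str], str]


def with_memory(policy: Policy, retrieve: Callable[[str], list[str]]) -> Policy:
    """Wrap an existing policy so retrieved lessons are added to its system prompt."""
    def wrapped(system_prompt: str, task: str) -> str:
        lessons = retrieve(task)
        if not lessons:
            # No relevant memory: call the original policy unchanged.
            return policy(system_prompt, task)
        guidance = "\n".join(f"- {lesson}" for lesson in lessons)
        return policy(f"{system_prompt}\n\nLessons from past runs:\n{guidance}", task)
    return wrapped
```

Because the wrapper only touches the prompt, the same agent can be run with and without memory, which makes the contribution of the memory layer easy to isolate.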


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.