IBM Released New Granite 4.0 Models with a Novel Hybrid Mamba-2/Transformer Architecture: Drastically Reducing Memory Use without Sacrificing Performance

IBM just released Granite 4.0, an open-source LLM family that swaps monolithic Transformers for a hybrid Mamba-2/Transformer stack to cut serving memory while keeping quality. Sizes span a 3B dense…

ServiceNow AI Releases Apriel-1.5-15B-Thinker: An Open-Weights Multimodal Reasoning Model that Hits Frontier-Level Performance on a Single-GPU Budget

ServiceNow AI Research Lab has released Apriel-1.5-15B-Thinker, a 15-billion-parameter open-weights multimodal reasoning model trained with a data-centric mid-training recipe—continual pretraining followed by supervised fine-tuning—without reinforcement learning or preference optimization. The…

Liquid AI Released LFM2-Audio-1.5B: An End-to-End Audio Foundation Model with Sub-100 ms Response Latency

Liquid AI has released LFM2-Audio-1.5B, a compact audio–language foundation model that both understands and generates speech and text through a single end-to-end stack. It positions itself for low-latency, real-time assistants…

The Role of Model Context Protocol (MCP) in Generative AI Security and Red Teaming

Overview: Model Context Protocol (MCP) is an open, JSON-RPC–based standard that formalizes how AI clients (assistants, IDEs, web apps) connect to servers exposing three primitives (tools, resources, and prompts) over defined transports…

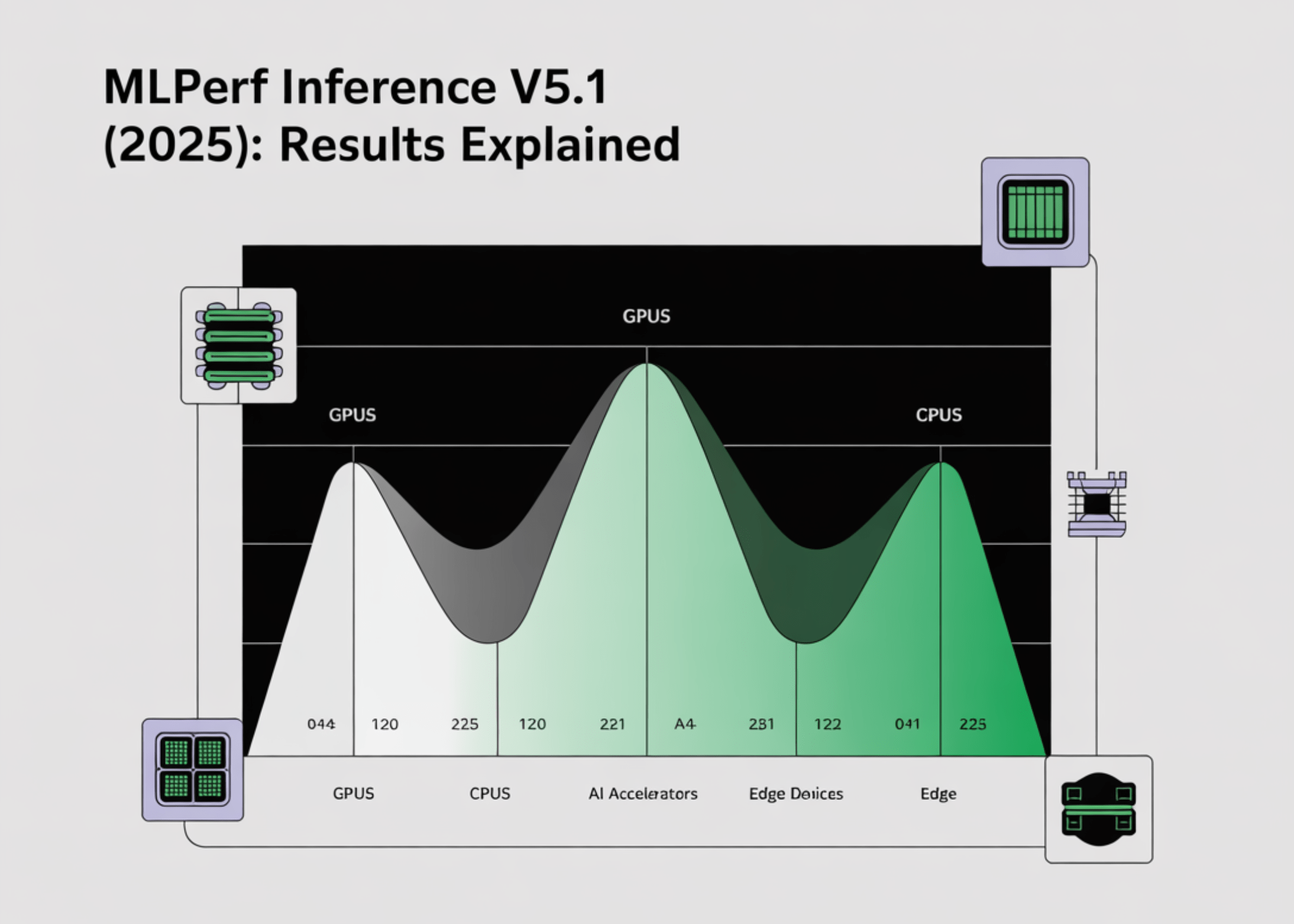

MLPerf Inference v5.1 (2025): Results Explained for GPUs, CPUs, and AI Accelerators

What does MLPerf Inference actually measure? MLPerf Inference quantifies how fast a complete system (hardware + runtime + serving stack) executes fixed, pre-trained models under strict latency and accuracy constraints. Results…

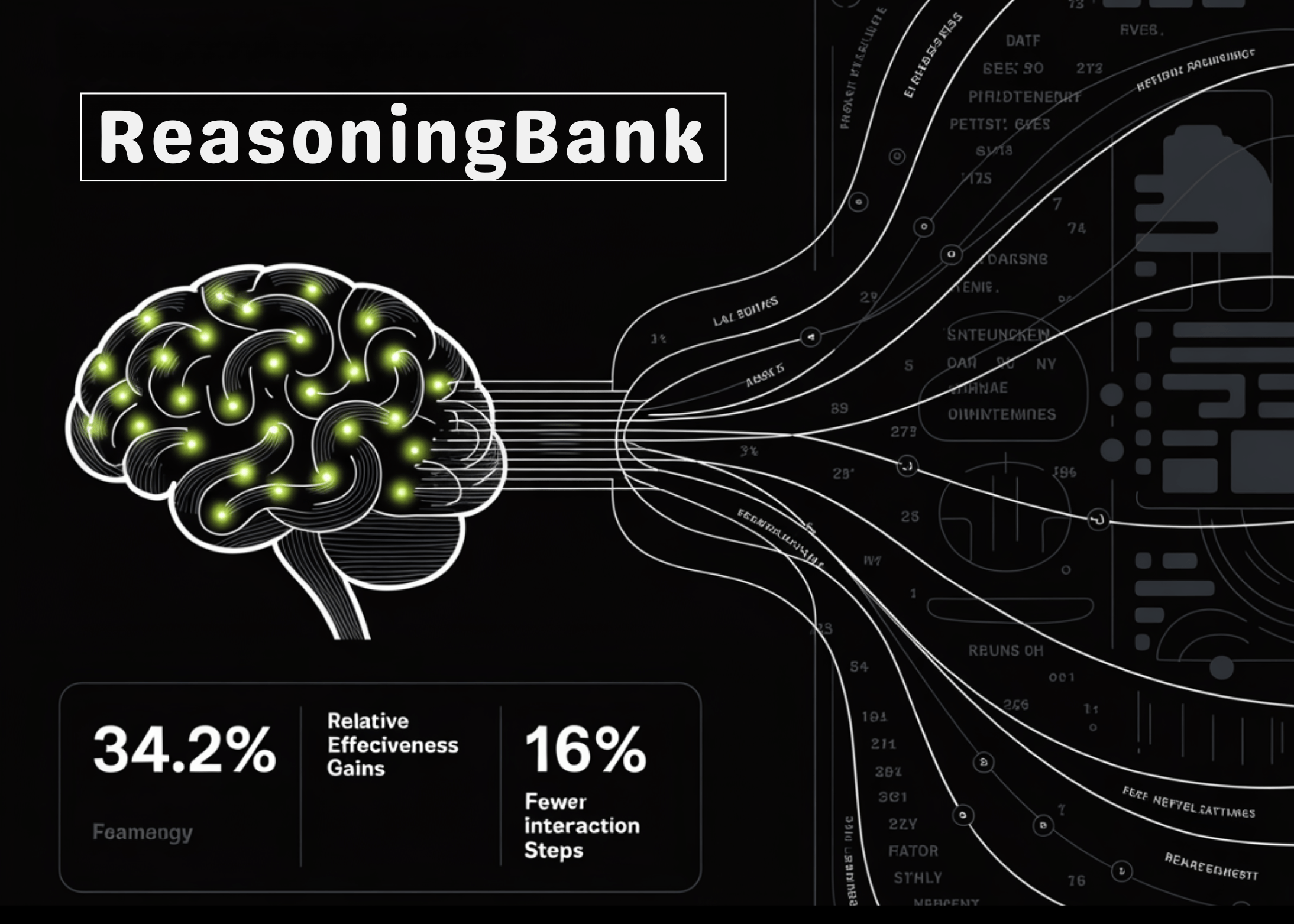

Google AI Proposes ReasoningBank: A Strategy-Level AI Agent Memory Framework that Makes LLM Agents Self-Evolve at Test Time

How do you make an LLM agent actually learn from its own runs—successes and failures—without retraining? Google Research proposes ReasoningBank, an AI agent memory framework that converts an agent’s own…

How to Build an Advanced Agentic Retrieval-Augmented Generation (RAG) System with Dynamic Strategy and Smart Retrieval

In this tutorial, we walk through the implementation of an Agentic Retrieval-Augmented Generation (RAG) system. We design it so that the agent does more than just retrieve documents; it actively…

Zhipu AI Releases GLM-4.6: Achieving Enhancements in Real-World Coding, Long-Context Processing, Reasoning, Searching and Agentic AI

Zhipu AI has released GLM-4.6, a major update to its GLM series focused on agentic workflows, long-context reasoning, and practical coding tasks. The model raises the input window to 200K…

OpenAI Launches Sora 2 and a Consent-Gated Sora iOS App

OpenAI released Sora 2, a text-to-video-and-audio model focused on physical plausibility, multi-shot controllability, and synchronized dialogue/SFX. The OpenAI team has also launched a new invite-only Sora iOS app (U.S. and…

DeepSeek V3.2-Exp Cuts Long-Context Costs with DeepSeek Sparse Attention (DSA) While Maintaining Benchmark Parity

DeepSeek released DeepSeek-V3.2-Exp, an “intermediate” update to V3.1 that adds DeepSeek Sparse Attention (DSA)—a trainable sparsification path aimed at long-context efficiency. DeepSeek also reduced API prices by 50%+, consistent with…