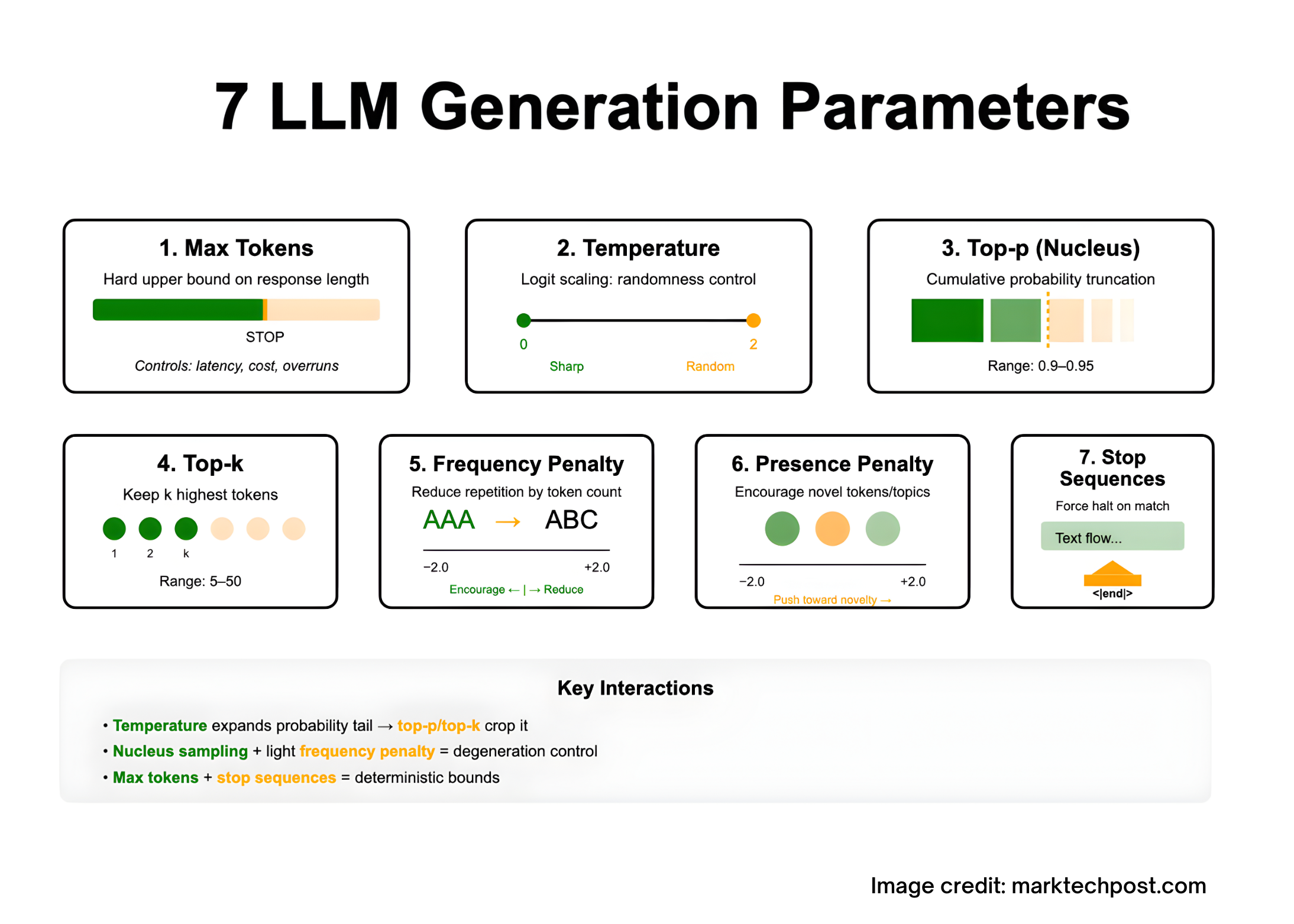

Tuning LLM outputs is largely a decoding problem: you shape the model’s next-token distribution with a handful of sampling controls—max tokens (caps response length under the model’s context limit), temperature (logit scaling for more/less randomness), top-p/nucleus and top-k (truncate the candidate set by probability mass or rank), frequency and presence penalties (discourage repetition or encourage novelty), and stop sequences (hard termination on delimiters). These seven parameters interact: temperature widens the tail that top-p/top-k then crop; penalties mitigate degeneration during long generations; stop plus max tokens provides deterministic bounds. The sections below define each parameter precisely and summarize vendor-documented ranges and behaviors grounded in the decoding literature.

1) Max tokens (a.k.a. max_tokens, max_output_tokens, max_new_tokens)

What it is: A hard upper bound on how many tokens the model may generate in this response. It doesn’t expand the context window; the sum of input tokens and output tokens must still fit within the model’s context length. If the limit hits first, the API marks the response “incomplete/length.”

When to tune:

- Constrain latency and cost (tokens ≈ time and $$).

- Prevent overruns past a delimiter when you cannot rely solely on

stop.

2) Temperature (temperature)

What it is: A scalar applied to logits before softmax:

softmax(z/T)i=∑jezj/Tezi/T

Lower T sharpens the distribution (more deterministic); higher T flattens it (more random). Typical public APIs expose a range near [0,2][0, 2][0,2]. Use low T for analytical tasks and higher T for creative expansion.

3) Nucleus sampling (top_p)

What it is: Sample only from the smallest set of tokens whose cumulative probability mass ≥ p. This truncates the long low-probability tail that drives classic “degeneration” (rambling, repetition). Introduced as nucleus sampling by Holtzman et al. (2019).

Practical notes:

- Common operational band for open-ended text is

top_p ≈ 0.9–0.95(Hugging Face guidance). - Anthropic advises tuning either

temperatureortop_p, not both, to avoid coupled randomness.

4) Top-k sampling (top_k)

What it is: At each step, restrict candidates to the k highest-probability tokens, renormalize, then sample. Earlier work (Fan, Lewis, Dauphin, 2018) used this to improve novelty vs. beam search. In modern toolchains it’s often combined with temperature or nucleus sampling.

Practical notes:

- Typical

top_kranges are small (≈5–50) for balanced diversity; HF docs show this as “pro-tip” guidance. - With both

top_kandtop_pset, many libraries apply k-filtering then p-filtering (implementation detail, but useful to know).

5) Frequency penalty (frequency_penalty)

What it is: Decreases the probability of tokens proportionally to how often they already appeared in the generated context, reducing verbatim repetition. Azure/OpenAI reference specifies the range −2.0 to +2.0 and defines the effect precisely. Positive values reduce repetition; negative values encourage it.

When to use: Long generations where the model loops or echoes phrasing (e.g., bullet lists, poetry, code comments).

6) Presence penalty (presence_penalty)

What it is: Penalizes tokens that have appeared at least once so far, encouraging the model to introduce new tokens/topics. Same documented range −2.0 to +2.0 in Azure/OpenAI reference. Positive values push toward novelty; negative values condense around seen topics.

Tuning heuristic: Start at 0; nudge presence_penalty upward if the model stays too “on-rails” and won’t explore alternatives.

7) Stop sequences (stop, stop_sequences)

What it is: Strings that force the decoder to halt exactly when they appear, without emitting the stop text. Useful for bounding structured outputs (e.g., end of JSON object or section). Many APIs allow multiple stop strings.

Design tips: Pick unambiguous delimiters unlikely to occur in normal text (e.g., "<|end|>", "\n\n###"), and pair with max_tokens as a belt-and-suspenders control.

Interactions that matter

- Temperature vs. Nucleus/Top-k: Raising temperature expands probability mass into the tail;

top_p/top_kthen crop that tail. Many providers recommend adjusting one randomness control at a time to keep the search space interpretable. - Degeneration control: Empirically, nucleus sampling alleviates repetition and blandness by truncating unreliable tails; combine with light frequency penalty for long outputs.

- Latency/cost:

max_tokensis the most direct lever; streaming the response doesn’t change cost but improves perceived latency. ( - Model differences: Some “reasoning” endpoints restrict or ignore these knobs (temperature, penalties, etc.). Check model-specific docs before porting configs.

References:

- https://arxiv.org/abs/1904.09751

- https://openreview.net/forum?id=rygGQyrFvH

- https://huggingface.co/docs/transformers/en/generation_strategies

- https://huggingface.co/docs/transformers/en/main_classes/text_generation

- https://arxiv.org/abs/1805.04833

- https://aclanthology.org/P18-1082.pdf

- https://help.openai.com/en/articles/5072263-how-do-i-use-stop-sequences

- https://platform.openai.com/docs/api-reference/introduction

- https://docs.aws.amazon.com/bedrock/latest/userguide/model-parameters-anthropic-claude-messages-request-response.html

- https://cloud.google.com/vertex-ai/generative-ai/docs/multimodal/content-generation-parameters

- https://cloud.google.com/vertex-ai/generative-ai/docs/learn/prompts/adjust-parameter-values

- https://learn.microsoft.com/en-us/azure/ai-foundry/openai/how-to/reasoning